Welcome back to the System Design series! In this post, we will explore the concept of caching, a powerful technique used to enhance the speed and performance of applications. Caching plays a critical role in optimizing data retrieval and ensuring a smooth user experience.

What is Caching?

Caching is a technique where systems store frequently accessed data in a temporary storage area, known as a cache, so they can serve future requests for the same data quickly. Reading from the cache is typically much faster than going back to the original data source, which makes caching an effective way to improve system performance.

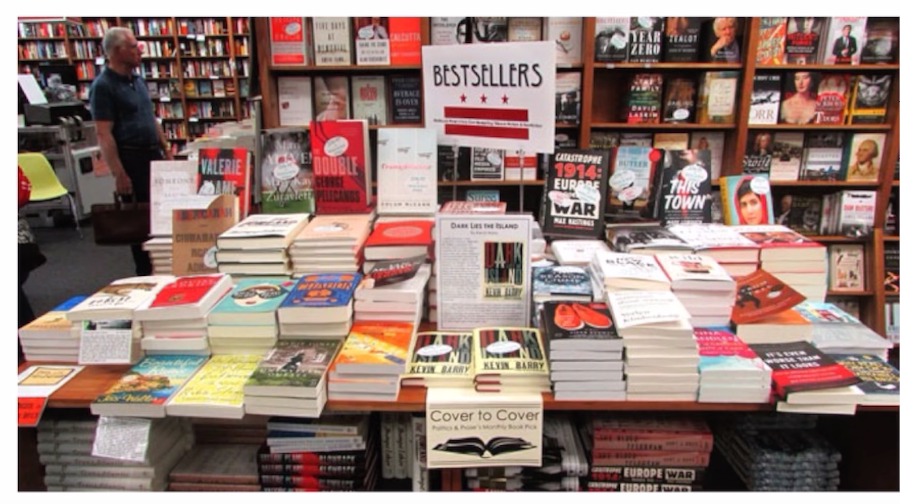

Real-World Example:

In our bookstore example, think of the “Bestseller” section near the entrance. This section contains the most popular books that customers frequently ask for. Instead of having customers search through the entire store, they can easily find bestsellers in one convenient location. By keeping these popular books readily available, the store improves customer satisfaction and speeds up the shopping process.

How it Relates to Web Applications:

In a web application, caching works the same way: frequently accessed data (like user profiles or product listings) is stored in a cache. When a user requests this data, the application checks the cache first. If the data is there (a cache hit), the application returns it quickly and skips slower operations like database queries. If it is not (a cache miss), the application retrieves the data from the database, stores it in the cache for future requests, and then returns it to the user.
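The read path just described is often called the cache-aside pattern. Here is a minimal Python sketch of it, assuming a plain dictionary stands in for a real cache such as Redis and a hypothetical `query_database` function simulates a slow database; neither is a real library API.

```python
import time

# Hypothetical stand-in for a database table.
FAKE_DB = {"user:1": {"name": "Alice"}, "user:2": {"name": "Bob"}}

# A plain dict standing in for a real cache such as Redis (an assumption for illustration).
cache = {}


def query_database(key):
    """Hypothetical slow database lookup."""
    time.sleep(0.05)  # simulate a 50 ms query
    return FAKE_DB.get(key)


def get(key):
    """Cache-aside read: return (value, 'hit' or 'miss')."""
    if key in cache:
        return cache[key], "hit"      # cache hit: skip the database entirely
    value = query_database(key)       # cache miss: go to the original data source
    if value is not None:
        cache[key] = value            # store it for future requests
    return value, "miss"


print(get("user:1"))  # ({'name': 'Alice'}, 'miss')
print(get("user:1"))  # ({'name': 'Alice'}, 'hit')
```

Skipping the cache write when the database returns nothing is a design choice in this sketch; some systems also cache "not found" results so repeated lookups of missing keys do not keep hitting the database.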

Types of Cache

- Memory Cache:

  - Definition: Cache stored in RAM, which can be read much faster than data on a disk or across a network.

  - Examples: In-memory data stores like Redis and Memcached.

  - Use Cases: Storing session data, user authentication tokens, and the results of frequently run database queries.

- Disk Cache:

  - Definition: Cache stored on disk, which is slower than RAM but persists across process and machine restarts.

  - Examples: A web browser’s cache, which keeps web pages and images on disk between sessions.

  - Use Cases: Storing large media files, web pages, or data that doesn’t fit entirely in memory.
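To make the difference in persistence concrete, here is a small Python sketch. A plain dictionary would be an in-memory cache that vanishes when the process exits; the standard library's `shelve` module is used here as a simple file-backed cache. `shelve` is only a convenient stand-in for illustration, not how browsers implement their caches, and the cache key and file name are made up.

```python
import os
import shelve
import tempfile

# Disk cache: shelve pickles values into a file, so entries outlive the open handle
# (and the process). The file location here is a throwaway temp directory.
cache_path = os.path.join(tempfile.mkdtemp(), "page_cache")

with shelve.open(cache_path) as disk_cache:
    disk_cache["/index.html"] = "<html>Bestsellers</html>"

# Reopening the file, as a restarted process would, reads the entry back from disk.
with shelve.open(cache_path) as disk_cache:
    print(disk_cache["/index.html"])  # <html>Bestsellers</html>
```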

Cache Hit and Cache Miss

- Cache Hit: Occurs when the requested data is found in the cache. This results in faster data retrieval, improving the overall performance of the application.

- Cache Miss: Occurs when the requested data is not found in the cache, forcing the system to fetch it from the original data source. Cache misses are slower and can negatively impact performance.

Importance of Cache Hit/Miss Ratio:

To measure the effectiveness of a caching strategy, engineers track the cache hit ratio: the number of cache hits divided by the total number of requests (hits plus misses). A high hit ratio means the system serves most requests from the cache, which lowers response times and reduces load on the backend servers. A low hit ratio means the cache is not holding the data that requests actually need, so more requests fall through to the slower data source.
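The hit ratio is simply hits / (hits + misses). The Python sketch below counts both while replaying a made-up sequence of requests against an unbounded cache (a `set` of keys); the class name `HitRatioCounter` is hypothetical.

```python
class HitRatioCounter:
    """Counts cache hits and misses and reports the hit ratio."""

    def __init__(self):
        self.hits = 0
        self.misses = 0

    def record(self, hit):
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0  # avoid dividing by zero before any requests


cache = set()
stats = HitRatioCounter()
for key in ["a", "b", "a", "c", "a", "b"]:  # made-up request trace
    stats.record(key in cache)   # a hit if this key was requested before
    cache.add(key)               # after a miss, the key is now cached

print(stats.hits, stats.misses, stats.hit_ratio)  # 3 3 0.5
```

Because this cache never evicts anything, every repeat request is a hit; a bounded cache that has to evict entries would score the same or lower on the same trace.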

Cache Eviction Policies

Caches have limited storage capacity. When the cache is full, some data must be removed to make room for new data. This process is known as cache eviction. Several strategies are used for cache eviction:

- Random Eviction:

  - Definition: Selects items at random and removes them to make space.

  - Pros: Simple to implement, with no bookkeeping on each access.

  - Cons: Ignores access patterns, so it can evict data that is still in heavy use.

- FIFO (First-In-First-Out):

  - Definition: Evicts the oldest items (those that entered the cache first).

  - Pros: Easy to understand and implement.

  - Cons: May remove frequently accessed data simply because it entered the cache early.

- LFU (Least Frequently Used):

  - Definition: Evicts the items accessed the fewest times.

  - Pros: Keeps frequently accessed data in the cache.

  - Cons: Requires tracking access frequency, which adds overhead.

- LRU (Least Recently Used):

  - Definition: Evicts the items that have not been accessed for the longest time.

  - Pros: Works well when recently used data is likely to be used again; widely used and effective in practice.

  - Cons: Requires tracking the access order, which adds complexity.
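The four policies can be sketched in Python as small classes that share a `get`/`put` interface. This is a minimal illustration, assuming capacity is counted in entries; the class names are hypothetical, and the usage at the end contrasts which key each policy evicts.

```python
import random
from collections import Counter, OrderedDict


class FIFOCache:
    """Evicts the entry that was inserted first, no matter how often it is read."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()  # keys kept in insertion order

    def get(self, key):
        return self.data.get(key)  # reads do not change the eviction order

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            self.data.popitem(last=False)  # remove the oldest insertion
        self.data[key] = value  # updating an existing key keeps its position


class LRUCache(FIFOCache):
    """Evicts the entry that has gone the longest without being accessed."""

    def get(self, key):
        if key in self.data:
            self.data.move_to_end(key)  # mark as most recently used
        return self.data.get(key)

    def put(self, key, value):
        if key in self.data:
            self.data.move_to_end(key)
        super().put(key, value)  # the front of the OrderedDict is the least recently used


class LFUCache:
    """Evicts the entry with the fewest accesses; ties go to the earliest-inserted key."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = {}
        self.counts = Counter()  # key -> number of accesses (puts and gets)

    def get(self, key):
        if key in self.data:
            self.counts[key] += 1
        return self.data.get(key)

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            # Linear scan for brevity; production LFU caches typically use cheaper bookkeeping.
            victim = min(self.data, key=lambda k: self.counts[k])
            del self.data[victim]
            del self.counts[victim]
        self.data[key] = value
        self.counts[key] += 1


class RandomCache:
    """Evicts a randomly chosen entry."""

    def __init__(self, capacity, seed=None):
        self.capacity = capacity
        self.data = {}
        self.rng = random.Random(seed)  # a seed makes runs reproducible

    def get(self, key):
        return self.data.get(key)

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            del self.data[self.rng.choice(list(self.data))]
        self.data[key] = value


# FIFO vs LRU: "a" is read again before "c" arrives.
fifo, lru = FIFOCache(2), LRUCache(2)
for cache in (fifo, lru):
    cache.put("a", 1)
    cache.put("b", 2)
    cache.get("a")
    cache.put("c", 3)  # the cache is full, so one entry must go
print(sorted(fifo.data), sorted(lru.data))  # ['b', 'c'] ['a', 'c']

# LFU: "a" is read twice and "b" once, so "b" is evicted even though it was read
# more recently; an LRU cache would evict "a" here instead.
lfu = LFUCache(2)
lfu.put("a", 1)
lfu.put("b", 2)
for key in ["a", "a", "b"]:
    lfu.get(key)
lfu.put("c", 3)
print(sorted(lfu.data))  # ['a', 'c']
```

`OrderedDict.move_to_end` is what keeps the LRU version cheap: every access moves a key to the back, so the least recently used key always sits at the front. For caching function results in everyday Python code, the standard library's `functools.lru_cache` decorator already provides LRU eviction.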

Challenges of Caching

While caching offers significant performance benefits, it also comes with challenges:

- Staleness: Cached data can become outdated if the underlying data changes. Managing cache consistency and ensuring that users see the most recent data is crucial.

- Overhead: Caching requires memory and processing power. Choosing what to cache and managing the cache efficiently is critical to avoid excessive resource consumption.

- Consistency: Maintaining consistency between cached data and the original data source is essential, especially in distributed systems that involve multiple caches.
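One common way to limit staleness, not covered above, is to give each cache entry a time-to-live (TTL) so it expires and is refetched after a fixed period. Below is a minimal Python sketch; the `TTLCache` class and the 60-second TTL are assumptions for illustration, and the clock is injectable so expiry can be simulated without actually waiting.

```python
import time


class TTLCache:
    """Hypothetical dict-based cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # swap in a fake clock for testing
        self.data = {}       # key -> (value, expiry time)

    def set(self, key, value):
        self.data[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self.data.get(key)
        if entry is None:
            return None                # never cached: a miss
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.data[key]         # expired: drop it and report a miss
            return None
        return value


now = [0.0]  # fake clock, in seconds
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.set("price:42", 19.99)
print(cache.get("price:42"))  # 19.99
now[0] = 61.0                 # simulate 61 seconds passing
print(cache.get("price:42"))  # None
```

A shorter TTL means fresher data but more cache misses, so the right value depends on how quickly the underlying data changes. Redis, for example, lets you attach an expiry to a key when you set it.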

Example Challenges in Bookstore:

- Staleness: A bestseller is no longer popular, but it still takes up space in the front section.

- Overhead: Deciding how much space to allocate for the bestseller section without cluttering the store.

- Consistency: Ensuring that the bestseller section is updated regularly with the most popular books.

Example Challenges in Web Applications:

- Staleness: Showing outdated product prices or information due to stale cache data.

- Overhead: Allocating sufficient memory for caching without impacting the overall system performance.

- Consistency: Keeping cached user data in sync with the database to avoid showing incorrect information.
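For the product-price case, one common approach is to update the database and then delete the cached copy, so the next read misses and reloads the fresh value. The Python sketch below assumes dictionaries standing in for the database and the cache, and the `read_product`/`update_price` helpers are hypothetical.

```python
# Hypothetical in-memory stand-ins for a products table and a cache.
database = {"product:7": {"price": 25.00}}
cache = {}


def read_product(key):
    """Cache-aside read: serve from the cache, or load from the database on a miss."""
    if key not in cache:
        cache[key] = dict(database[key])  # cache a copy, as a separate cache server would
    return cache[key]


def update_price(key, new_price):
    """Write to the database first, then invalidate the cached copy."""
    database[key]["price"] = new_price
    cache.pop(key, None)  # the next read misses and fetches the new price


print(read_product("product:7")["price"])  # 25.0
update_price("product:7", 19.99)
print(read_product("product:7")["price"])  # 19.99
```

Without the `cache.pop` call, readers would keep seeing 25.0 until the entry was evicted or expired. In a distributed system with many cache nodes or concurrent writers, this simple pattern can still leave short windows in which a stale value is served, which is part of why consistency is a challenge.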
